PTSM: Pharmaceutical Technology Sourcing and Management

- PTSM: Pharmaceutical Technology Sourcing and Management-09-06-2017

- Volume 12

- Issue 9

Evolution of Analytical Tools Advances Pharma, But Challenges Remain

Technology improvements in analytical testing tools have helped increase understanding of molecules and speed up development processes.

Analytical testing, which deals with characterization of raw materials and finished dosage forms, plays an important role in pharmaceutical manufacturing and all phases of drug development. In this roundtable article, industry experts discuss how advances in analytical testing tools have helped address challenges in pharmaceutical analysis. Industry experts include: Guillaume Tremintin, market area manager, Biopharma, Bruker Daltonics; Lisa Newey-Keane, marketing manager, Life Science Sector, Malvern Instruments; Gurmil Gendeh, marketing manager for Pharmaceuticals at Shimadzu; Kyle D’Silva and Simon Cubbon, both Pharma and BioPharma marketing managers at Thermo Fisher Scientific; and Geofrey Wyatt, president of Wyatt Technology Corporation.

Evolution of analytical tools

PharmTech: How have the tools for analytical testing of small molecules and biologics evolved over the past 10 years?

Newey-Keane (Malvern): Developments in analytical instrumentation for pharma have largely been addressing three key trends over the past decade. The first is the significant rise in small-molecule generic drug development. This development has increased the requirement for instruments that offer performance-relevant measurements and/or high informational productivity for deformulation and the demonstration of bioequivalence (BE).

The second important trend is the shift towards continuous manufacture (CM). The potential ease of scale-up of continuous processes and the ability to file on the basis of full-scale experimental data make CM particularly interesting for pharma, but its realization relies on effective monitoring and automated control. Here, the ongoing transition of core analytical techniques to fully integrated, online implementation is enabling rapid progress.

Finally, in biologics, we’ve seen growing awareness of the importance and power of orthogonality when it comes to probing the complex nature of the proteins. Identifying the optimal set of biophysical characterization techniques is crucial to comprehensively elucidate critical aspects of behavior, such as stability. Significant progress has been made in this area, and there is now growing awareness of how to complement traditional techniques with newer/less well-established ones, such as differential scanning calorimetry (DSC) and Taylor dispersion analysis (TDA), to maximize understanding and add value.

Wyatt (Wyatt): The tools have gotten more sophisticated, specific, and in some cases, more expensive too. In the never-ending quest to learn more about small molecules and biologics, the FDA has encouraged the use of orthogonal testing techniques. As a result, greater insight and understanding of these molecules has been possible.

Tremintin (Bruker): What we have seen evolve is the increased usage of high-resolution accurate-mass instruments (i.e., moving from instruments where you have a limited level of insight to higher resolutions that give users the ability to confidently determine the identity of a target molecule). On an ultrahigh-resolution quadrupole time-of-flight (QTOF) mass spectrometer (MS), such as the Bruker maXis II, the accurate mass and the precise relative intensities of the isotopes allow derivation of a molecular formula or a protein monoisotopic mass. On the Fourier transform ion cyclotron resonance (FT-ICR) MS side, with an instrument such as the solariX XR, one can even resolve the hyperfine structure of the isotopic pattern and directly read the molecular formula of the ion being measured. Building confidence and getting deeper insights through high resolution has been the biggest change.
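As a rough illustration of why mass accuracy matters for deriving a molecular formula, the sketch below compares a measured mass against a few candidate formulas that share the same nominal mass. It is a minimal sketch, not any vendor's algorithm: the candidate list, the measured value, the tolerances, and the function names are all hypothetical, the measured value is assumed to be already converted to a neutral monoisotopic mass, and isotope intensities are ignored.

```python
# Monoisotopic masses (in u) of the most abundant isotope of each element.
MONOISOTOPIC_MASS = {
    "C": 12.0,
    "H": 1.00782503,
    "N": 14.00307401,
    "O": 15.99491462,
    "S": 31.97207117,
}


def monoisotopic_mass(formula):
    """Sum isotope masses for a formula given as {element: atom count}."""
    return sum(MONOISOTOPIC_MASS[element] * count for element, count in formula.items())


def ppm_error(measured, theoretical):
    """Relative deviation of the measured mass, in parts per million."""
    return (measured - theoretical) / theoretical * 1e6


def matching_formulas(measured, candidates, tolerance_ppm):
    """Return the names of candidates whose mass lies within the tolerance."""
    return [
        name
        for name, formula in candidates.items()
        if abs(ppm_error(measured, monoisotopic_mass(formula))) <= tolerance_ppm
    ]


# Hypothetical candidates, all with nominal mass 180.
CANDIDATES = {
    "C6H12O6": {"C": 6, "H": 12, "O": 6},
    "C9H8O4": {"C": 9, "H": 8, "O": 4},
    "C10H12O3": {"C": 10, "H": 12, "O": 3},
}

MEASURED = 180.0634  # hypothetical measured neutral monoisotopic mass

# A loose tolerance cannot separate the candidates; a tight one can.
low_accuracy_hits = matching_formulas(MEASURED, CANDIDATES, tolerance_ppm=200)
high_accuracy_hits = matching_formulas(MEASURED, CANDIDATES, tolerance_ppm=5)
print(low_accuracy_hits)
print(high_accuracy_hits)
```

With the loose tolerance all three candidates remain plausible, whereas the tight tolerance leaves a single formula. In practice the isotope pattern adds further constraints that this sketch does not model.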

Cubbon (Thermo Fisher Scientific): Two key areas that have contributed to accelerated evolution of biologic characterization have been the widespread adoption of high-resolution accurate mass (HRAM) spectrometry and advancement of software tools that deliver meaningful answers to non-expert users. A decade ago, characterization of biologics demanded expert mass spectrometrists to both acquire and interpret data, typically done manually over days and weeks. Instrument variability and difficulty of operation meant that a high degree of skill was required for the results to be meaningful. Today, platforms such as the Thermo Scientific Q Exactive BioPharma with Thermo Scientific BioPharma Finder software have democratized mass spectrometry. Software tailored for drug quality attribute monitoring easily transfers this data to actionable knowledge with automated reports, reducing the demand for specialist operators. As well as being easier to use, the systems are also smaller and more affordable than a decade ago. Consequently, we see greater adoption of these technologies, not just in research laboratories, but also further down the drug pipeline in development; in chemistry, manufacturing, and control (CMC); and even in quality control (QC) environments as biologic manufacturers seek to implement multiple attribute monitoring (MAM) methods using HRAM mass spectrometry to replace a host of chromatographic testing methods performed for QC lot release.

Gendeh (Shimadzu): We have seen an increasing adoption of ultra-high performance liquid chromatography (UHPLC) and sub-2-micron column chemistries for both small and large molecule analysis that allow faster, better, and higher resolution chromatography. As both small and large molecules become more complex, we have also seen the development and increasing adoption of higher resolution technologies, for example, two-dimensional liquid chromatography (2D-LC) and liquid chromatography combined with tandem mass spectrometry (LC/MS/MS). High-throughput, automated, and streamlined sample preparation that is fully integrated into analytical workflows has been adopted. Moreover, data systems have been adopted that integrate not only LC and LC/MS but the complete portfolio of analytical instrumentation, including spectroscopy, with seamless data analysis and reporting that are in full compliance with existing standards.

Recent advances

PharmTech: What are the most significant recent advances in analytical testing tools that have helped speed up and improved the success rate of drug development?

Tremintin (Bruker): The most significant recent advances have been high-resolution measurements that enable users to accelerate their protein characterization tasks. Because of the simplicity of the assay, customers can perform measurements upstream in their development. They have a better chance of identifying problems with a lead candidate earlier in development, which avoids spending too much money down alleys that will lead to failure or costly remediation. Users of maXis II have been able to use this type of measurement to detect incorrect glycosylation sites very early in development, at a stage when they are still able to send the molecule back to the molecular engineering department for optimization before advancing it any further. There also is a renewed interest in MALDI ionization to perform simple characterization tasks, such as identity testing of a recombinant product, without the need for a chromatography modality.

D’Silva (Thermo Fisher Scientific): When developing biologics, the biopharmaceutical industry looks to achieve full structural insight into its candidate molecules, with high confidence, as fast as possible. Developers look to fail candidates fast. Ion exchange chromatography using pH gradients was first proposed by Genentech in 2009 for profiling of therapeutic protein variants. Since then, unique patented products such as Thermo Scientific CX-1 pH Gradient Buffer Kits have led to a 10-fold reduction in analysis time over conventional salt gradients, dramatically accelerating drug development time.

Understanding the primary structure of a biologic candidate is a critical step performed early in the development process, but also at every stage after. Preparing the sample for this analysis was traditionally a labor-intensive and time-consuming, 24-hour process. Today, we see biopharmaceutical developers able to perform protein sample preparation in 45 minutes and achieve 100% coverage when mapping their drug candidates. The throughput of software for analyzing peptide digests has also increased dramatically. The time for interpreting biopharmaceutical comparative modification data can be cut from two weeks to one day.

Gendeh (Shimadzu): Mass spectrometers have definitely seen the biggest advances in terms of speed, resolution, and sensitivity gain over the past decade. The Shimadzu triple quadrupole mass spectrometer LCMS-8060, for example, delivers high sensitivity, fast scan speed, and fast polarity switching that enable collection of high-quality data and information. Having these features allows big pharma to fail candidates early, fast, and cheaply in preclinical studies, thus accelerating the pace of drug discovery and bringing safer and more efficacious drug candidates to market faster.

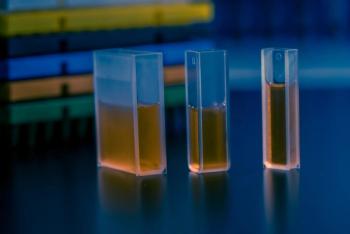

Wyatt (Wyatt): The tools we know best are those that we develop. Our DynaPro Plate Reader II (DynaPro PRII) is but one example. Until it came into being, the only way to make a dynamic light scattering (DLS) measurement was to use one-at-a-time, batch measurement techniques in small cuvettes. The measurement required an operator to prepare a sample, pipette it into a cuvette, then place the cuvette in the instrument, close a lid, make a measurement, open the lid, remove the cuvette, and, as the saying goes ‘lather, rinse, and repeat.’

The DynaPro PRII has taken industry standard well plates of 96-, 384-, or 1536-well formats and enabled them to be used as massively multiple cuvettes. An entire plate of hundreds or thousands of small molecules or other biologics can be pipetted into the wells, the well plate inserted in the instrument, and … that’s it. The technician can walk away and do other work while the instrument makes DLS measurements, ramps temperatures, etc. In fact, the DynaPro PRII enables researchers to perform tasks that were heretofore impossible. The daunting challenge of, for example, screening thousands of samples at different pH levels, or investigating promiscuous inhibitors, is no longer an impediment to making huge numbers of DLS measurements.

Newey-Keane (Malvern): Morphologically-directed Raman spectroscopy (MDRS) is a relatively new technique that combines the capabilities of automated imaging and Raman spectroscopy. It delivers particle size and shape data, along with chemical identification for individual particles in a blend, making it a powerful tool for deformulation as well as many other pharmaceutical applications. MDRS data was recently used to establish in-vitro bioequivalence, in lieu of clinical trial data, in the approval of a generic nasal spray application by Apotex, highlighting its value in the time-critical world of generic drug development.

A relatively new addition to the biophysical characterization portfolio is TDA, which provides ultra-low volume, solution-based molecular size measurement. Technology that combines TDA with Poiseuille flow for relative viscosity assessment has been shown to be valuable for robustly identifying drug candidates with a poor developability profile early in the drug development pipeline, thereby saving time and money. TDA extends label-free measurements into highly complex solutions and is able, for example, to detect and size monomeric insulin, even in the presence of its hexameric form.

Finally, our latest technology launch exemplifies the trend of enhancing instrumentation to make it easier for researchers to access robust data for secure and effective decision-making. The MicroCal PEAQ-DSC is a fully-automated differential scanning microcalorimeter that extends the use of DSC (the ‘gold standard’ for protein stability assessment) throughout the development cycle. Key features include: unattended 24-hour operation; automated data analysis; built-in automated cleaning; and self-validation protocols.

Data interpretation

PharmTech: With so many features and functions on new analytical instruments (such as the capability to measure various parameters using one instrument), do the people using them actually understand the concepts behind these measurements, how the results are obtained, and how to interpret them correctly?

Wyatt (Wyatt): This is a frightening and very realistic possibility. Too often, vendors pursue ease of use at the expense of understanding, delivering ‘black boxes’: instruments that simply produce numbers. The researcher has little indication of whether the numbers mean something or whether they may as well be random because there is something wrong in the measurement itself or with the sample preparation. At Wyatt Technology, our customers come to Santa Barbara, CA to participate in Light Scattering University, a course that demystifies light scattering by teaching some of the theory, practice, sample preparation, and data interpretation. The result is that we create a cadre of customers who have an understanding of the powers, as well as the limitations, of the measurements that the instrument makes.

Newey-Keane (Malvern): Given productivity pressures, well-documented skill shortages, and the ever-increasing number of analytical techniques routinely deployed, it’s important to consider what level of understanding is actually optimal. For example, if you are carrying out routine QC, then a highly automated, standard operating procedure-driven, modern instrument with integrated method and data quality checking software can eliminate any need for understanding or interpretation, and will effectively remove any subjectivity in data analysis. Techniques such as laser diffraction have already matured to this point. Indeed, it could be argued that for many industrial applications, modern systems can now be more effectively differentiated on their ability to make their performance accessible than on their performance itself.

Conversely, if you are investigating a more complex concept, such as protein stability, then greater understanding is vital, and orthogonal testing is key. Here, the analytical instrumentation expert understands best the merits and limitations of the technology, but the researcher knows their sample and what information is most useful, so collaboration is vital. Interaction with applications specialists can be incredibly helpful, and the best instrumentation companies invest heavily to develop relevant in-house expertise. However, embedding expertise in instrumentation software is also an important and growing trend that has the potential to greatly enhance the support available to industrial users at the benchtop.

Tremintin (Bruker): It is the responsibility of the instrument manufacturers to deliver companion software solutions that harness the hardware capabilities and enable the users to gain the insights they need. For example, the recently released BioPharma Compass 2.0 software takes high-resolution protein measurements and translates them into actionable information such as a glycosylation profile or degradation levels. Similarly, the matrix-assisted laser desorption/ionization (MALDI) PharmaPulse solution takes hundreds of thousands of measurements and derives possible active ingredients against a defined substrate. This substantially improves the library screening approach by providing high throughput while not requiring any labels.

Gendeh (Shimadzu): Analytical instrument companies such as Shimadzu continuously innovate, developing not only new analytical instruments but also complete solutions that solve challenging real-world problems. Much of the early product and solution conceptualization and development is driven through partnership and collaboration with the industry. These new solutions are initially used by the industry partners or collaborators, who are often people that clearly understand the technology, the results, how to interpret the data, and more importantly, the value the data brings to their work. Over time, these new solutions are improved and packaged into ‘analyzers’ for the mainstream users. As an example, Shimadzu recently released one such ‘complete analyzer’ solution for the biopharmaceutical industry. The Shimadzu Cell Culture Profiling ‘analyzer’ combines optimized and validated UHPLC with a triple quadrupole mass spectrometry method to simultaneously analyze 95 compounds and metabolites in cell culture supernatant. The multi-parameter data from the LC/MS/MS platform are crucial in cell culture process development and media optimization: to promote cell growth; to rebalance media components and quantities by introducing new media components at variable levels to support continuous manufacturing; to monitor cell development and determine optimal harvest endpoints; and to develop cost-effective media.

D’Silva (Thermo Fisher Scientific): Traditionally, trying to understand a certain aspect of a complex biologic would require various techniques and painstaking analysis of any data (once you’d managed to acquire it, that is). To help customers meet the ever-increasing challenges they face, manufacturers need to listen and develop instrumentation that simplifies analysis, alongside impactful software that drives scientists to their ultimate goal: results.

The Thermo Scientific Q Exactive BioPharma platform, for example, allows scientists to analyze complex biotherapeutics using a single system: from native, intact, and sub-unit mass analysis through to peptide mapping, with minimal training and maintenance. Combined with Thermo Scientific BioPharma Finder integrated software, you have a data collection and interpretation workflow that streamlines scientists’ access to their results, allowing them to make rapid, informed decisions, rather than spend time setting systems up and learning complex software platforms. So, provided scientists have the right tools (no matter how complex they may be), the industry is in good stead to be able to acquire and interpret those all-important results.

Room for improvement

PharmTech: What areas are still lacking that make analytical testing challenging and what developments can we expect to see in this field over the next 5 to 10 years?

Tremintin (Bruker): Where you see the most movement is in terms of sample preparation and data processing. The big changes will be bringing more automation to streamline the sample preparation and make it more consistent and less dependent on the skill of the operators. On the back end, it would be to have smarter software that is able to process data faster, more effectively, thus allowing companies to compare larger data sets and derive trends and have feedback on the processes based on analytical results. Additionally, chromatography remains a challenge for some compounds, and so the improvements in ion mobility will add an additional dimension of separation that can help solve problems that challenge conventional chromatography techniques. For example, the Bruker trapped ion mobility separation (TIMS) has enabled the separation of isomers that until now could not be separated.

Gendeh (Shimadzu): Biologics are large and complex molecules, and sample preparation for their characterization and bioanalysis (e.g., absorption, distribution, metabolism, and elimination [ADME]/toxicology) has always been a challenge. We can expect to see more innovation and advancement not only in the automation but also in innovative chemistries to simplify the sample preparation workflows. The Perfinity platform from Shimadzu is one such example that fully automates tryptic digestion and brings sample preparation online with LC-MS. Shimadzu also recently introduced a nano-technology based antibody bioanalysis sample preparation solution called the nSMOL Antibody Bioanalysis Kit. This kit performs selective proteolysis of the antibody Fab region and greatly simplifies and streamlines antibody bioanalysis sample preparation. We expect to see many more similar innovations in nano-technology and micro-fluidic based sample preparation for the biopharmaceutical industry in the next five to 10 years.

Newey-Keane (Malvern): For biologics, the informational need is still being refined, particularly when it comes to biosimilars and the demonstration of BE; and analytical requirements are evolving in tandem with this. Getting more information from each sample remains crucial as this enables information gathering earlier in the drug development pipeline. Robustly identifying the best candidates as early as possible clearly cuts the time and cost of biopharmaceutical development.

The application of orthogonal techniques is bringing significant challenges in terms of data handling. The quantity of data is one issue, but a more taxing one is how to handle what can appear to be divergent data sets. Software platforms that integrate and rationalize data from an optimal set of techniques will be the way forward.

In terms of online implementation, we already have techniques such as laser diffraction particle size analysis that have successfully completed this transition but, over the coming years, others will follow suit. Such instrumentation will need to deliver exemplary reliability as CM matures, but will also be judged on ease of integration as automated control becomes a more routine feature of pharmaceutical processing.

Cubbon (Thermo Fisher Scientific): The list of molecular characterization assays demanded by regulators for biologic drugs is extensive. Typically, more than 30 individual chromatographic or immunoassays are performed to test for critical quality attributes of drug candidates by biologic manufacturers, which is both labor- and time-intensive.

Over the next five years we will see technologies emerging to amalgamate multiple assays into one and simplify the process. One area where this is already being implemented is multiple attribute monitoring (MAM) using HRAM, a single workflow capable of replacing multiple individual steps. Several companies already have drug candidates in late-stage development using this workflow. In 10 years, this technology will move from an off-line laboratory process to an at-line or on-line process beside the bioreactor, informing the manufacturer immediately of the structural properties of their candidate molecules.

Wyatt (Wyatt): I believe artificial intelligence (AI) will lend its benefits to our industry in the not-too-distant future. With more data being generated, it may become harder to distill information from the data. AI holds the promise of aiding the data interpretation and becoming wiser, the more data to which it’s exposed.
